9 August 2022

Coding teaser

A JavaScript coding teaser I came across.

Can you spot the bugs? How could you make the function faster?

(() => {
  const user = fetch(`/api/loggedInUser`);
  const comments = await fetch(`/api/users/${user.id}/comments`);
  const books = await fetch(`/api/users/${user.id}/books`);
  console.log(books, comments);
})();

Bugs

Pasting that code into a JS file and running it reveals the first problem: it fails with a `SyntaxError` before anything runs.

The `await` keyword is only valid inside an `async` function (or at the top level of a module). So we make the anonymous function async by adding the `async` keyword.

Also, the `await` on the logged-in user fetch is missing.

(async () => {
  const user = await fetch(`/api/loggedInUser`);
  const comments = await fetch(`/api/users/${user.id}/comments`);
  const books = await fetch(`/api/users/${user.id}/books`);
  console.log(books, comments);
})();

Next, let's use `setTimeout` to fake our API, since I am too lazy to create one.

// time is the simulated fetch response time in milliseconds
const fakeFetch = (result, time) => {
  return new Promise((resolve) => {
    setTimeout(() => {
      resolve(result);
    }, time);
  });
};

await (async () => {
  const user = await fakeFetch({ id: 69, name: "tnecniV" }, 300);
  const comments = await fakeFetch(`${user.id}-comments`, 700);
  const books = await fakeFetch(`${user.id}-books`, 500);
  console.log(books, comments);
})();

Note: I am using top-level `await` here, which is available in Bun and Deno.

Performance

We run a simple `time` to see how long it takes:

> time bun run teaser.js
69-books 69-comments
bun run teaser.js 1.82s user 1.19s system 198% cpu 1.516 total
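`time` reports the wall-clock time of the whole process, so it also includes Bun's startup. To time only the awaited requests, one option is to measure inside the script with the global `performance.now()` (available in Bun, Deno, Node.js and browsers). A minimal sketch; `timeIt` and `sequential` are hypothetical names, not from the original:

```javascript
// Same fakeFetch as above: resolves with `result` after `time` ms.
const fakeFetch = (result, time) =>
  new Promise((resolve) => setTimeout(() => resolve(result), time));

// Hypothetical helper: runs an async function and reports how long it took.
const timeIt = async (label, fn) => {
  const start = performance.now();
  const value = await fn();
  const elapsed = performance.now() - start;
  console.log(`${label}: ${elapsed.toFixed(0)} ms`);
  return { value, elapsed };
};

// The sequential version: each await delays the start of the next request.
const sequential = async () => {
  const user = await fakeFetch({ id: 69, name: "tnecniV" }, 300);
  const comments = await fakeFetch(`${user.id}-comments`, 700);
  const books = await fakeFetch(`${user.id}-books`, 500);
  return [books, comments];
};

timeIt("sequential", sequential).then(({ value }) => console.log(...value));
```

The in-script number should land close to the 1500 ms sum of the fake delays, without the runtime startup that `time` adds.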

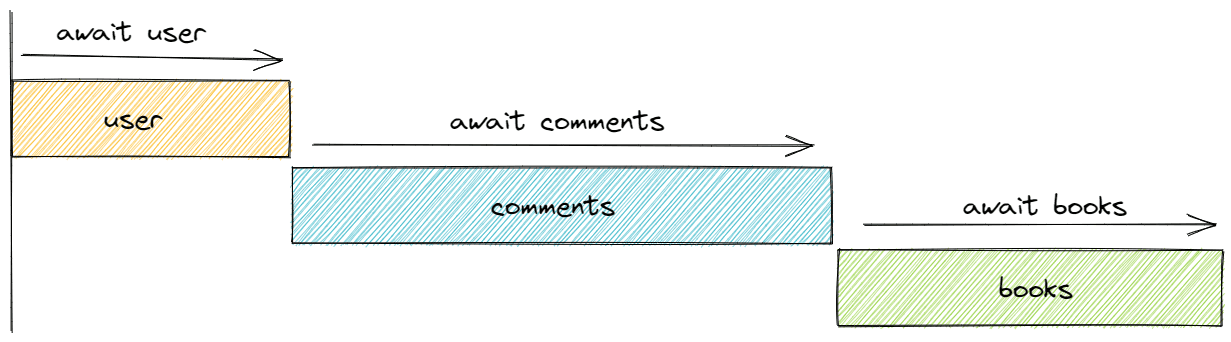

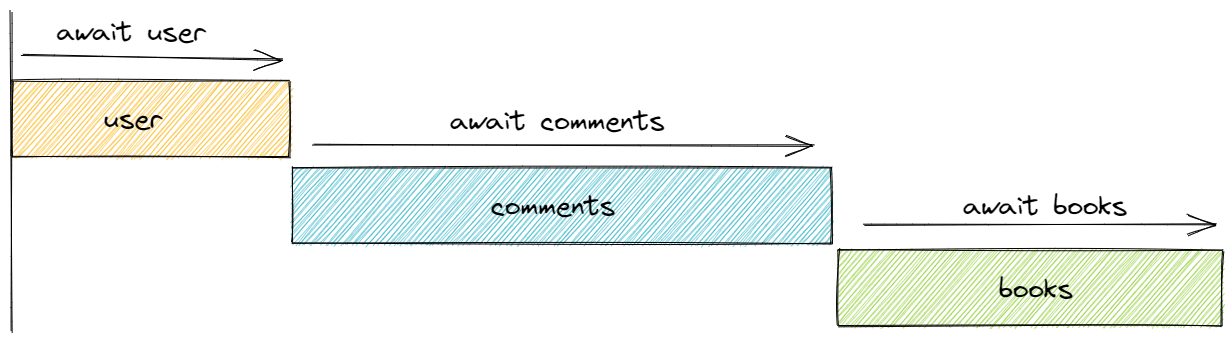

About 1.5 seconds, which is almost exactly the sum of all our response times (300 + 700 + 500 = 1500 ms). But why does it take so long? Let's illustrate the waterfall:

We run every request one after another, even though not every request depends on the previous one. Comments and books both depend on the user ID but not on each other, so we can start those two requests at the same time and await them together with `Promise.all`.

await (async () => {
  const user = await fakeFetch({ id: 69, name: "Tnecniv" }, 300);
  const [comments, books] = await Promise.all([
    fakeFetch(`${user.id}-comments`, 700),
    fakeFetch(`${user.id}-books`, 500),
  ]);
  console.log(books, comments);
})();

Time it again:

> time bun run teaser.js
69-books 69-comments
bun run teaser.js 1.21s user 0.80s system 198% cpu 1.018 total

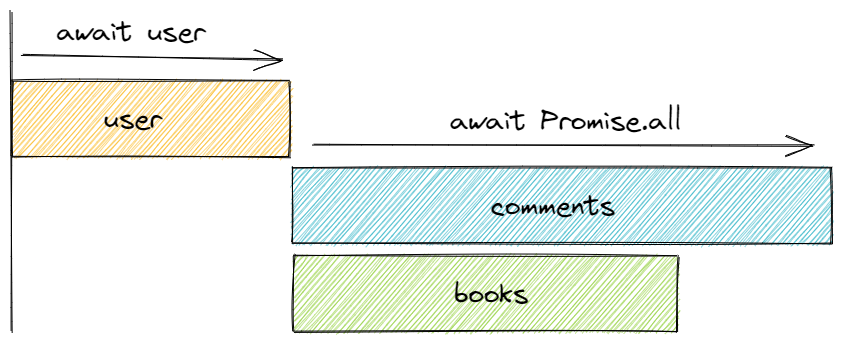

1 s = 300 ms + 700 ms! `Promise.all` resolves only once every promise in the input array has resolved, so it waits for the slowest one (here the 700 ms comments request). The updated waterfall looks something like this:

In this scenario we cut our runtime by about a third, from roughly 1.5 s to 1.0 s.
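The `Promise.all` behavior described above can be checked in-process: 300 + max(700, 500) = 1000 ms instead of 300 + 700 + 500 = 1500 ms. A small sketch of the `Promise.all` part, assuming a hypothetical `fakeFetchLogged` variant of `fakeFetch` that records when each fake request finishes. The faster books request (500 ms) settles first, yet the results still come back in input order, and the call takes about as long as the slowest request (700 ms) rather than the sum (1200 ms):

```javascript
// Hypothetical variant of fakeFetch that records the order in which requests finish.
const finished = [];
const fakeFetchLogged = (result, time) =>
  new Promise((resolve) =>
    setTimeout(() => {
      finished.push(result);
      resolve(result);
    }, time)
  );

const parallelPart = async () => {
  const start = performance.now();
  // Both timers start here, before Promise.all is awaited.
  const [comments, books] = await Promise.all([
    fakeFetchLogged("69-comments", 700),
    fakeFetchLogged("69-books", 500),
  ]);
  const elapsed = performance.now() - start;
  return { comments, books, elapsed };
};

parallelPart().then(({ comments, books, elapsed }) => {
  console.log("finish order:", finished); // books settles first
  console.log("result order:", comments, books); // input order is preserved
  console.log(`took ${elapsed.toFixed(0)} ms`);
});
```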

Benchmarking

Let's make it a bit more interesting by adding more requests and randomizing the API response times:

// random integer between min and max, both inclusive
const getRandom = (min, max) => {
  return Math.floor(Math.random() * (max - min + 1)) + min;
};

await (async () => {
  const user = await fakeFetch(
    { id: 69, name: "Tnecniv" },
    getRandom(100, 500)
  );
  const [comments, books, cats, dogs] = await Promise.all([
    fakeFetch(`${user.id}-comments`, getRandom(500, 1000)),
    fakeFetch(`${user.id}-books`, getRandom(500, 1000)),
    fakeFetch(`${user.id}-cats`, getRandom(500, 1000)),
    fakeFetch(`${user.id}-dogs`, getRandom(500, 1000)),
  ]);
  console.log(books, comments, cats, dogs);
})();
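As the number of dependent requests grows, building the `Promise.all` input with `map` keeps the call site short. A sketch, assuming the same `fakeFetch` and `getRandom` as above; the `resources` array and `fetchUserData` name are illustrative, not from the original:

```javascript
const fakeFetch = (result, time) =>
  new Promise((resolve) => setTimeout(() => resolve(result), time));

const getRandom = (min, max) =>
  Math.floor(Math.random() * (max - min + 1)) + min;

// Hypothetical list of resources that all depend only on the user ID.
const resources = ["comments", "books", "cats", "dogs"];

const fetchUserData = async () => {
  const user = await fakeFetch({ id: 69, name: "Tnecniv" }, getRandom(100, 500));
  // One fake request per resource, all started before any of them is awaited.
  const results = await Promise.all(
    resources.map((name) => fakeFetch(`${user.id}-${name}`, getRandom(500, 1000)))
  );
  // Promise.all keeps input order, so index i belongs to resources[i].
  return Object.fromEntries(resources.map((name, i) => [name, results[i]]));
};

fetchUserData().then((data) => console.log(data));
```

Adding another request then only means adding a name to `resources`.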

Now we benchmark the script above against the unoptimized version with hyperfine:

> hyperfine 'bun run teaser-extended.js' 'bun run teaser-unoptimized.js'
Benchmark 1: bun run teaser-extended.js
  Time (mean ± σ): 1.165 s ± 0.166 s [User: 0.009 s, System: 0.007 s]
  Range (min … max): 1.001 s … 1.422 s 10 runs

Benchmark 2: bun run teaser-unoptimized.js
  Time (mean ± σ): 3.363 s ± 0.277 s [User: 0.018 s, System: 0.003 s]
  Range (min … max): 2.823 s … 3.769 s 10 runs

Summary
  'bun run teaser-extended.js' ran
    2.89 ± 0.47 times faster than 'bun run teaser-unoptimized.js'
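The measured means line up with what the random delays predict, assuming teaser-unoptimized.js awaits the same five fake requests one after another. The sequential script should then average about 300 + 4 × 750 = 3300 ms. The parallel one should average about 300 ms plus the expected maximum of four delays between 500 and 1000 ms (roughly 900 ms), so about 1200 ms, a ratio of about 2.75, within the measured 2.89 ± 0.47. A quick Monte Carlo sketch of that model, which ignores runtime startup and timer jitter (`simulate` is a hypothetical helper):

```javascript
// Random integer between min and max, both inclusive (same as getRandom above).
const getRandom = (min, max) =>
  Math.floor(Math.random() * (max - min + 1)) + min;

// Draws random response times and returns the mean modeled runtime (ms)
// of the sequential version and the Promise.all version.
const simulate = (runs) => {
  let seqTotal = 0;
  let parTotal = 0;
  for (let i = 0; i < runs; i++) {
    const user = getRandom(100, 500);
    const others = Array.from({ length: 4 }, () => getRandom(500, 1000));
    seqTotal += user + others.reduce((a, b) => a + b, 0); // all delays add up
    parTotal += user + Math.max(...others); // only the slowest dependent request counts
  }
  return { seqMean: seqTotal / runs, parMean: parTotal / runs };
};

const { seqMean, parMean } = simulate(100_000);
console.log(`sequential ≈ ${seqMean.toFixed(0)} ms, parallel ≈ ${parMean.toFixed(0)} ms`);
console.log(`speedup ≈ ${(seqMean / parMean).toFixed(2)}x`);
```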

Final remarks

There are a few things in the teaser code that are either "bugs" or just left out:

- The original code uses `.id` on the `Response` of the user fetch directly. For an API you would usually call `.json()` on it first.
- There is no error handling: if there is no logged-in user, there is no ID.
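To see the first point concretely: `fetch` resolves to a `Response` object, whose body has to be parsed before fields like `id` exist. A sketch that builds a `Response` by hand instead of calling a real API (the `Response` constructor is a global in Bun, Deno, browsers and Node.js 18+; the JSON body is made up for illustration):

```javascript
const demo = async () => {
  // Stand-in for `await fetch("/api/loggedInUser")`; the body is a made-up example.
  const res = new Response(JSON.stringify({ id: 69, name: "Tnecniv" }), {
    headers: { "Content-Type": "application/json" },
  });
  console.log(res.id); // undefined: a Response has no `id` property
  const user = await res.json(); // parse the body first
  console.log(user.id); // 69
  return { rawId: res.id, parsedId: user.id };
};

demo();
```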

await (async () => {
  try {
    const userRes = await fetch(`/api/loggedInUser`);
    // parse the Response body before reading .id
    const user = await userRes.json();
    if (!user?.id) {
      throw new Error(`No user id found`);
    }
    // both requests only depend on the user ID, so run them concurrently
    const responses = await Promise.all([
      fetch(`/api/users/${user.id}/comments`),
      fetch(`/api/users/${user.id}/books`),
    ]);
    const [comments, books] = await Promise.all(
      responses.map((res) => res.json())
    );
    console.log(books, comments);
  } catch (error) {
    console.error(error);
  }
})();

Note: top-level `await` is used here as well.
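One gap the version above still leaves open: `fetch` only rejects on network failures, not on HTTP error statuses such as 404 or 500, so `.json()` may end up parsing an error page. A sketch of a hypothetical `getJson` helper that checks `res.ok` first; the `fetchImpl` parameter and the `fakeApi` stand-in exist only so the helper can be exercised without a real API:

```javascript
// Hypothetical helper: fetch a URL, fail on non-2xx statuses, then parse JSON.
const getJson = async (url, fetchImpl = fetch) => {
  const res = await fetchImpl(url);
  if (!res.ok) {
    throw new Error(`Request to ${url} failed with status ${res.status}`);
  }
  return res.json();
};

// Hypothetical fake API: answers the user endpoint, 404 for everything else.
const fakeApi = async (url) =>
  url === "/api/loggedInUser"
    ? new Response(JSON.stringify({ id: 69 }), { status: 200 })
    : new Response("Not found", { status: 404 });

getJson("/api/loggedInUser", fakeApi).then((user) => console.log(user.id));
getJson("/api/users/69/books", fakeApi).catch((error) => console.error(error.message));
```

Inside the `try` block above, `getJson` could replace each `fetch` plus `.json()` pair, so a failed request lands in the existing `catch`.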